Overview

In this lab, you will be introduced to the web performance and load testing capabilities provided in Visual Studio Enterprise 2017. You will walk through a scenario using a fictional online storefront, where your goal is to model and analyze its performance with a number of simultaneous users. This involves defining web performance tests that represent users browsing and ordering products, defining a load test based on those web performance tests, and finally analyzing the load test results.

Prerequisites

-

This lab requires that your Visual Studio 2017 instance have the Web performance and load testing tools installed from the Individual components tab of the Visual Studio Installer.

-

This lab requires you to complete tasks 1 and 2 from the prerequisite instructions.

-

This lab requires Microsoft Excel.

Exercise 1: Web Application Load and Performance Testing with Visual Studio 2017

Task 1: Recording web tests

-

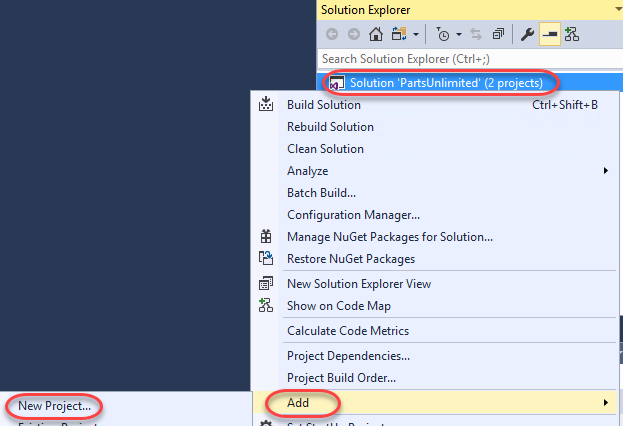

In Solution Explorer, right-click the solution node and select Add | New Project.

-

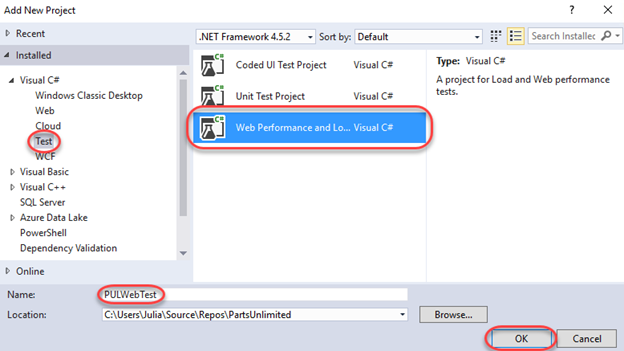

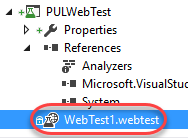

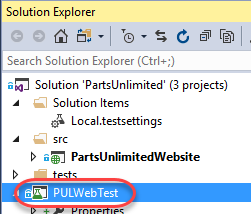

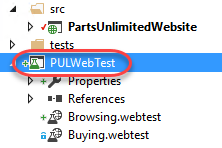

Select the Visual C# | Test category and the Web Performance and Load Test Project template. Enter a Name of “PULWebTest” and click OK.

-

Once the project has been created, select Debug | Start Without Debugging to build and launch the site locally.

-

Return to Visual Studio, leaving the browser window open to the local version of the site.

-

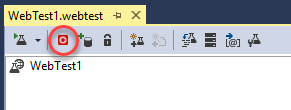

The default (and empty) WebTest1.webtest file will be open as a result of the earlier project creation. Click the Add Recording button to begin a recording session.

-

If asked to enable the extension in your browser, click to allow it. Then close the window and restart the recording session from Visual Studio using the previous step.

-

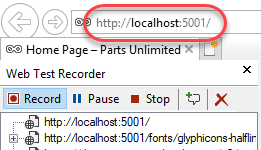

In the newly opened browser window, navigate to http://localhost:5001/. This is the URL of the project site.

-

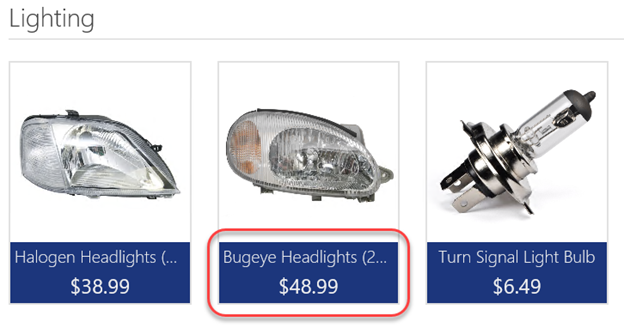

Click the Lighting tab.

-

Click the Bugeye Headlights product.

-

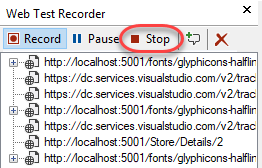

Continue browsing the site to generate more traffic, if desired. However, do not log in or add anything to your cart. When ready to move on, click Stop in the Web Test Recorder.

-

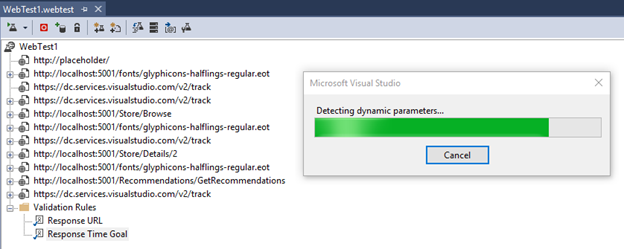

After you stop the recording session, Visual Studio analyzes the recorded traffic to identify dynamic parameters that can be extracted and configured later.

-

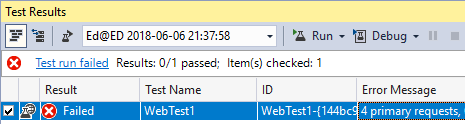

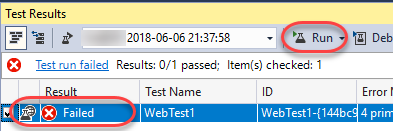

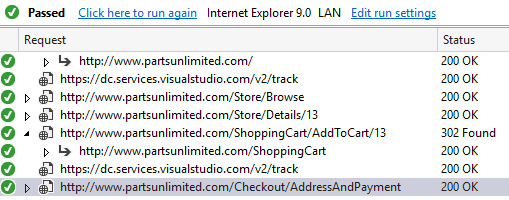

An initial test will also be run. However, it will likely fail because of requests for Application Insights resources that were not configured in this starter project.

-

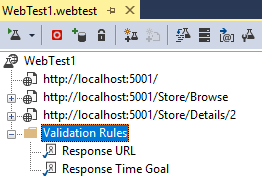

In the web test, select each step for the placeholder URL (if present) and any tracking requests, and press the Delete key to remove them one by one. You can also delete steps that download resources, such as fonts. When you’re done, you should have only three requests: the site root, /Store/Browse, and /Store/Details/2.
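The cleanup rule in the previous step (keep only page requests to the local site; drop tracking calls and resource downloads) can be sketched as a simple URL filter. This is a minimal illustration, not something Visual Studio runs: the recorded URL list, the tracking host, the query string, and the set of resource extensions are all hypothetical stand-ins for whatever your own recording captured.

```python
from urllib.parse import urlparse

# Hypothetical recording; your captured URLs will differ.
RECORDED = [
    "http://localhost:5001/",
    "https://dc.services.visualstudio.com/v2/track",  # hypothetical tracking request
    "http://localhost:5001/Store/Browse?Category=Lighting",  # hypothetical query string
    "http://localhost:5001/fonts/glyphicons-halflings-regular.eot",
    "http://localhost:5001/Store/Details/2",
]

SITE_HOST = "localhost:5001"
# Assumed list of file types to treat as resource downloads rather than pages.
RESOURCE_EXTENSIONS = (".eot", ".woff", ".woff2", ".ttf", ".svg", ".css", ".js", ".png", ".jpg")


def keep_request(url: str) -> bool:
    """Return True for page requests made to the site under test."""
    parts = urlparse(url)
    if parts.netloc != SITE_HOST:
        return False  # third-party or tracking request
    # Drop static resources such as fonts; keep page navigations.
    return not parts.path.lower().endswith(RESOURCE_EXTENSIONS)


kept = [url for url in RECORDED if keep_request(url)]
for url in kept:
    print(urlparse(url).path)
```

Against this sample list, the filter prints the three paths the lab expects: /, /Store/Browse, and /Store/Details/2.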

-

In the Test Results window, select the failed test and click Run.

-

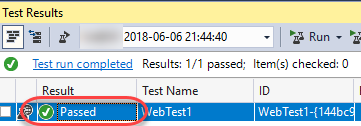

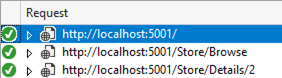

Since the browsing was basic, it should easily pass. Double-click the successful run to load its details.

-

Select different requests throughout the test run to see details on how it performed, how large the payloads were, and so on. You can also dig into the specific HTTP requests and responses.

-

Close the test results.

Task 2: Working with web tests

-

From Solution Explorer, open WebTest1.webtest if it’s not already open.

-

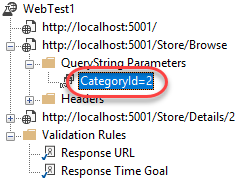

Locate the step that navigates to /Store/Browse and expand QueryString Parameters. Note that the parameters have been extracted so that you can easily swap in different values to test other scenarios.
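Conceptually, an extracted query string parameter is a named slot whose value can be swapped without re-recording. The sketch below shows the same idea with Python's standard `urllib.parse`. The parameter name `Category` and its values are hypothetical, so check the QueryString Parameters node in your own recording for the real names.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical recorded request; the real parameter name may differ.
RECORDED = "http://localhost:5001/Store/Browse?Category=Lighting"


def with_parameters(url: str, **overrides: str) -> str:
    """Return the URL with selected query string values replaced."""
    parts = urlparse(url)
    # parse_qs maps each name to a list of values; keep the first one.
    params = {name: values[0] for name, values in parse_qs(parts.query).items()}
    params.update(overrides)
    return parts._replace(query=urlencode(params)).geturl()


print(with_parameters(RECORDED, Category="Batteries"))
# http://localhost:5001/Store/Browse?Category=Batteries
```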

-

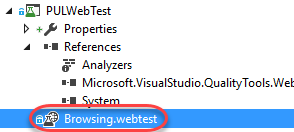

In Solution Explorer, rename WebTest1.webtest to Browsing.webtest.

-

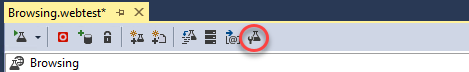

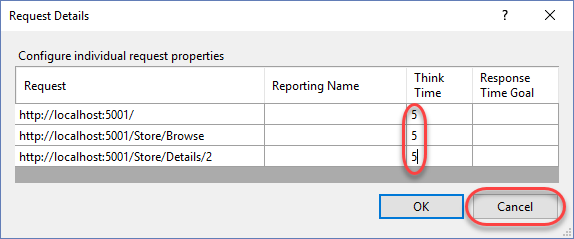

In Browsing.webtest, click the Set Request Details button.

-

This dialog enables you to configure the Think Time to use for each step. Think Time simulates the time an end user would pause to read the current page, think about their next action, and so on. Here you can manually set how much think time to use for each step, as well as set goals for response time on each navigation. Click Cancel.

Task 3: Recording sophisticated tests

-

For the rest of this exercise, we will need a registered site user. Return to the original browser window opened when running the project.

-

Click Register as a new user.

-

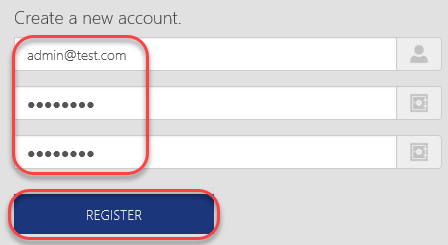

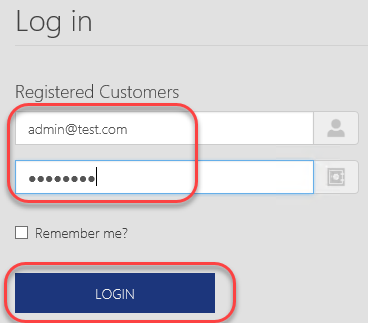

Register with the email admin@test.com and password P@ssw0rd.

-

Now we can add a more sophisticated test that involves completing a purchase. Return to Visual Studio.

-

In Solution Explorer, right-click the PULWebTest project and select Add | Web Performance Test.

-

Navigate to http://localhost:5001/ like before.

-

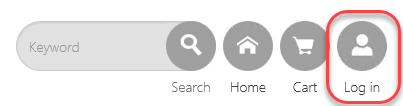

Click Log in.

-

Enter the email admin@test.com and password P@ssw0rd. Click Login.

-

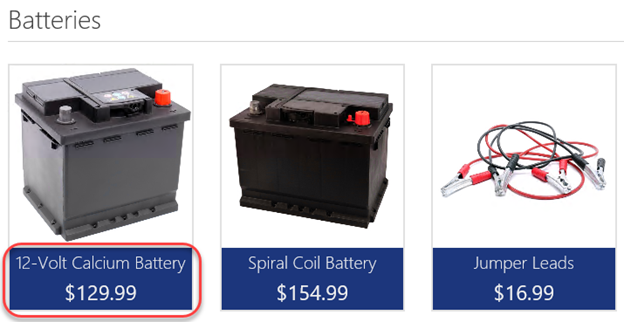

Click the Batteries tab.

-

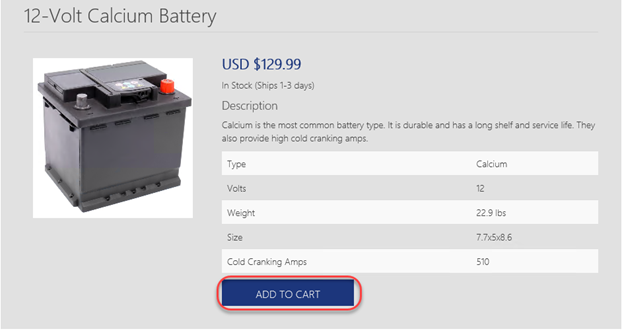

Click the 12-Volt Calcium Battery.

-

Click Add to Cart.

-

Click Checkout.

-

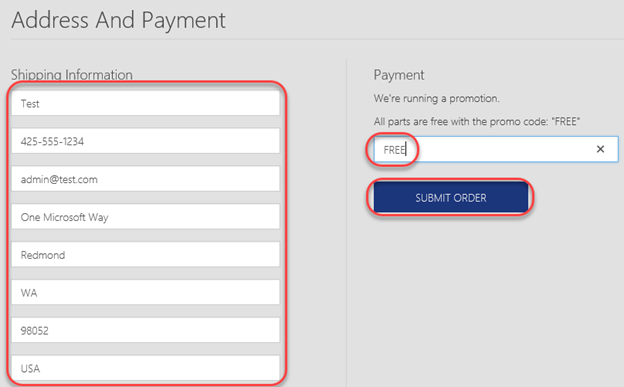

Enter shipping information (details are not important) and be sure to use the Promo Code FREE. Click Submit Order.

-

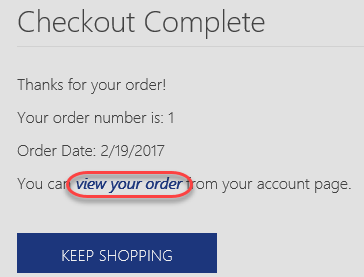

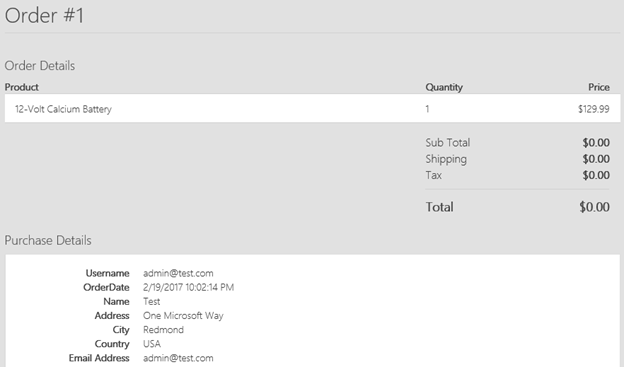

On the checkout page, click view your order to review the order details.

-

The details should all match what you entered earlier.

-

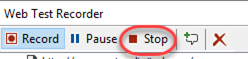

Click Stop in the Web Test Recorder to continue.

-

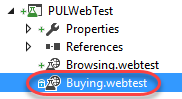

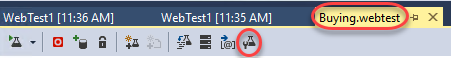

In Solution Explorer, rename WebTest1.webtest to Buying.webtest.

-

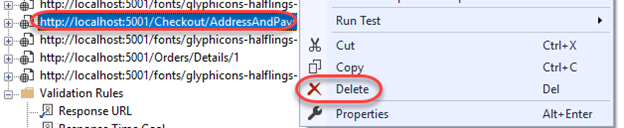

Like earlier, delete any steps that aren’t requests to the http://localhost:5001/ site, as well as any requests for resource files, such as .eot fonts.

Task 4: Viewing web test result details

-

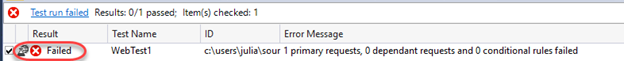

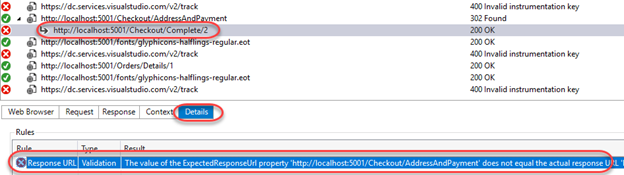

As before, Visual Studio will process the recorded steps and then attempt to execute the test. Double-click the failed test run to view it.

-

Locate the failed step after the checkout completes and click it. It should be the redirect that occurs immediately after the order is submitted. Click the Details tab to confirm.

-

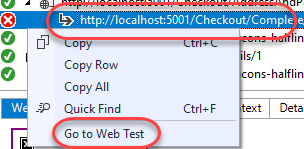

This failure makes sense. In our original test, we checked out and received an order number embedded in the redirect URL. However, since the next test run would presumably result in a different order number, the redirect received would have a different URL from the one expected. Right-click the failed step and select Go to Web Test to review that step in the process.
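The mismatch can be illustrated with a small sketch: a hard-coded expectation captured during recording only matches the original order, while a value extracted from the actual redirect matches every run. The `/Checkout/Complete/<id>` URL shape and the order numbers are hypothetical. Inside Visual Studio, the equivalent would be an extraction rule on the response, not Python code.

```python
import re
from typing import Optional

# Hypothetical redirect shape: /Checkout/Complete/<order id>
ORDER_ID_PATTERN = re.compile(r"/Checkout/Complete/(\d+)")

# The expectation frozen into the recording (hypothetical order number 41).
RECORDED_REDIRECT = "http://localhost:5001/Checkout/Complete/41"


def extract_order_id(location: str) -> Optional[int]:
    """Pull the order number out of a redirect URL, or None if absent."""
    match = ORDER_ID_PATTERN.search(location)
    return int(match.group(1)) if match else None


def matches_recording(location: str) -> bool:
    """Static check: passes only if the URL is identical to the recording."""
    return location == RECORDED_REDIRECT


# A later run places a new order, so the server redirects somewhere else.
later_run = "http://localhost:5001/Checkout/Complete/42"
print(matches_recording(later_run))  # False: the static expectation fails
print(extract_order_id(later_run))   # 42: the dynamic value can be tracked
```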

-

Depending on your test goals, you might want the test to dynamically capture the order number returned and track it throughout the remainder of the test. However, for the purposes of this lab, we will simply delete that step to avoid the failure. Right-click the step and select Delete.

-

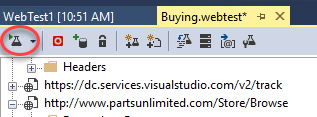

Click the Run Test button again to confirm the test passes now.

-

The test should pass as expected.

-

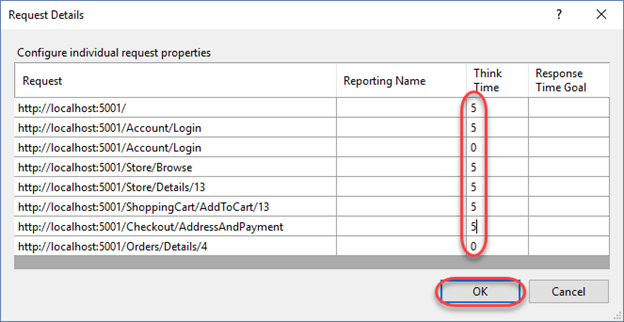

Return to Buying.webtest and click the Set Request Details button.

-

Since we’re going to use this test as part of a load testing run, let’s update the Think Time columns with some realistic numbers. Try to have the whole run use around 30 seconds of total think time. For example, you may want the obvious navigations to each have 5 seconds of think time while all the other requests have 0. Click OK when done.
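The arithmetic behind the 30-second target is simple: with 5 seconds on each obvious navigation and 0 elsewhere, the total is 5 × (number of navigations). The sketch below assumes a hypothetical Buying test with six navigations. The step labels are illustrative, not the exact requests your recorder captured.

```python
# Hypothetical Buying.webtest steps: (label, is it an obvious page navigation?)
STEPS = [
    ("GET /", True),
    ("GET /Account/Login", True),
    ("POST /Account/Login", False),
    ("GET /Store/Browse", True),
    ("GET /Store/Details/{id}", True),
    ("GET /ShoppingCart/AddToCart/{id}", False),
    ("GET /ShoppingCart", True),
    ("GET /Checkout/AddressAndPayment", True),
    ("POST /Checkout/AddressAndPayment", False),
]

TARGET_TOTAL_S = 30


def per_navigation_think_time(steps, target_total_s):
    """Spread the target evenly over navigations; other steps get 0."""
    navigations = sum(1 for _, is_nav in steps if is_nav)
    return target_total_s / navigations


per_nav = per_navigation_think_time(STEPS, TARGET_TOTAL_S)
think_times = [per_nav if is_nav else 0 for _, is_nav in STEPS]
print(per_nav, sum(think_times))  # 5.0 30.0
```

If your recording has more or fewer navigations, the same division tells you how much think time each one needs to keep the total near 30 seconds.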

Task 5: Adding a load test

-

Now that we have two tests that cover common scenarios, let’s use them as part of a load test to see how the system might perform with a variety of simultaneous users. In Solution Explorer, right-click the PULWebTest project and select Add | Load Test.

-

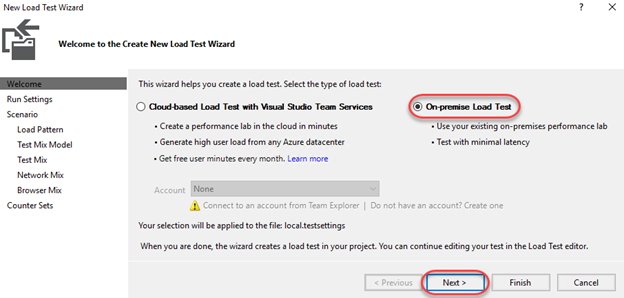

There are two options for load testing: cloud-based and on-premises. The cloud-based load testing hosted by Azure DevOps offers a massively scalable environment to truly stress test your system. However, in this scenario we’re going to select On-premise Load Test to contain testing entirely within the VM. Click Next.

-

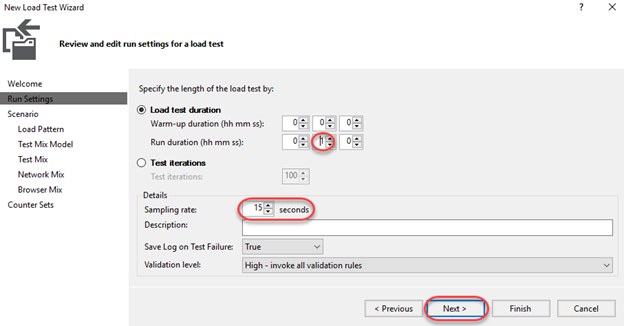

The Run Settings enable you to indicate whether you’d like to run the test for a specified duration or if you’d like to run for a certain number of iterations. In this scenario, select Load test duration and set the Run duration to 1 minute. You can also configure a variety of details for testing, such as the Sampling rate for collecting data. Keep this at 15 seconds. Click Next.
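With a 1-minute duration and a 15-second sampling rate, the run produces only a handful of data points, which is why the graphs in this lab will look coarse. The sketch assumes one sample at the end of each interval and ignores warm-up and cool-down periods. Visual Studio's exact sampling boundaries may differ.

```python
from typing import List


def sample_times(duration_s: int, sampling_rate_s: int) -> List[int]:
    """Seconds into the run at which a sample is taken (end of each interval)."""
    return list(range(sampling_rate_s, duration_s + 1, sampling_rate_s))


print(sample_times(60, 15))            # [15, 30, 45, 60]
print(len(sample_times(10 * 60, 15)))  # 40 samples for a 10-minute run
```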

-

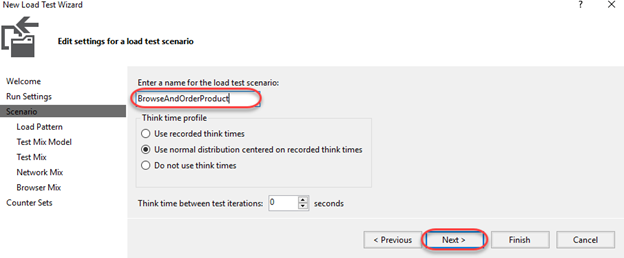

Enter the name “BrowseAndOrderProduct”. Note that you could optionally configure how think times are applied. Although we entered explicit think times earlier, we can use the default option to add a degree of randomness (normally distributed based on the recorded times) so that each set of requests isn’t exactly the same. Click Next.
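The idea behind the normally distributed think time profile can be sketched as drawing each pause from a normal distribution centered on the recorded value. The 20% standard deviation and the clamp at zero are assumptions made for illustration. The lab does not state the parameters Visual Studio uses.

```python
import random
import statistics


def varied_think_time(recorded_s: float, rng: random.Random, spread: float = 0.2) -> float:
    """Draw a think time centered on the recorded value.

    ASSUMPTION: standard deviation = spread * recorded_s; this is not a
    documented Visual Studio value.
    """
    if recorded_s <= 0:
        return 0.0  # steps recorded with no think time stay at zero
    return max(0.0, rng.gauss(recorded_s, spread * recorded_s))


rng = random.Random(42)  # fixed seed so the sketch is repeatable
samples = [varied_think_time(5.0, rng) for _ in range(10_000)]
print(round(statistics.mean(samples), 1))  # close to the recorded 5 seconds
print(min(samples) >= 0.0)
```

Each individual pause varies, but the average stays near the recorded value, which is the property that keeps the overall test timing realistic.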

-

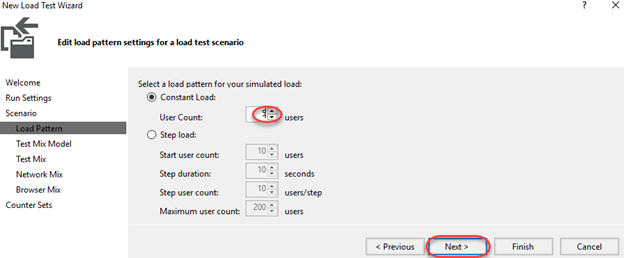

We’ll keep things simple by having a Constant Load of 5 users. However, there are scenarios where you might prefer to have the user count step up over the course of the test to simulate growing traffic. Click Next.
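For comparison, a constant load keeps the user count fixed, while a stepped load adds users at intervals up to a ceiling. The function and parameter names below are hypothetical, chosen to mirror the kind of settings a step pattern needs (initial users, step duration, users per step, maximum users). They are not Visual Studio's API.

```python
def constant_load(t_s: int, users: int = 5) -> int:
    """Same number of virtual users for the whole run (t_s is unused)."""
    return users


def step_load(t_s: int, initial: int, step_duration_s: int, step_users: int, maximum: int) -> int:
    """Add step_users every step_duration_s seconds, capped at maximum."""
    steps_completed = t_s // step_duration_s
    return min(maximum, initial + steps_completed * step_users)


for t in (0, 15, 30, 45, 60):
    print(t, constant_load(t), step_load(t, initial=1, step_duration_s=15, step_users=2, maximum=6))
```

With these hypothetical settings, the stepped pattern goes 1, 3, 5, 6, 6 users while the constant pattern stays at 5.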

-

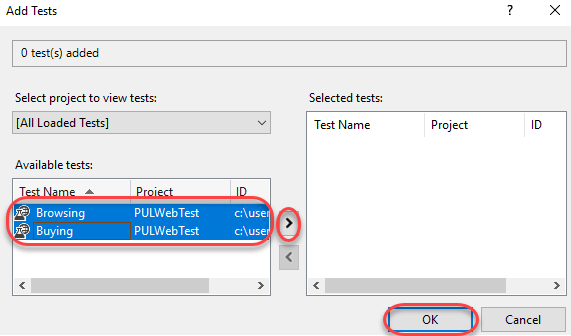

Since virtual users will run different tests, you can select an algorithm for modeling the test mix. For example, Based on the total number of tests lets you specify the percentage of total test runs allocated to each test. This is particularly useful when you find that 1 out of every 4 users who browse the site ends up buying something. In that case, you would want a mix of 75% “browsers” and 25% “browse & buyers”. Click Next.
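One way to picture a mix based on the total number of tests is a scheduler that always starts whichever test is furthest behind its target share. This is an illustrative model assumed for the sketch. Visual Studio's internal selection logic is not described in this lab and may, for example, introduce randomness.

```python
from collections import Counter
from typing import Dict


def pick_next(mix: Dict[str, float], started: Counter) -> str:
    """Choose the test whose started count lags its target percentage the most."""
    total_after_pick = sum(started.values()) + 1
    return max(mix, key=lambda name: mix[name] * total_after_pick / 100 - started[name])


mix = {"Browsing": 75, "Buying": 25}
started: Counter = Counter()
for _ in range(100):
    started[pick_next(mix, started)] += 1

print(dict(started))  # {'Browsing': 75, 'Buying': 25}
```

In this deterministic model, every block of four test starts contains three Browsing runs and one Buying run, so the 75/25 ratio holds at any multiple of four.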

-

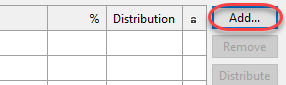

Click Add to select tests to add to the mix.

-

Select the Browsing and Buying tests and add them to the mix. Click OK.

-

Set the relative percentages to 75 and 25. Click Next.

-

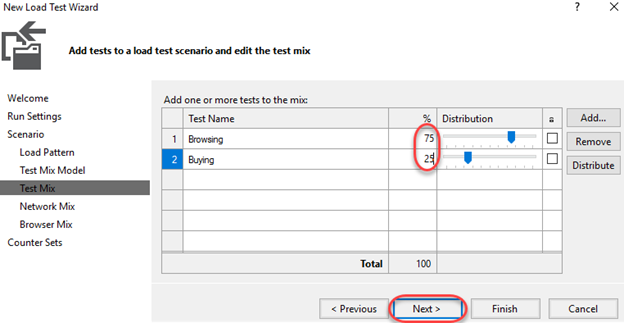

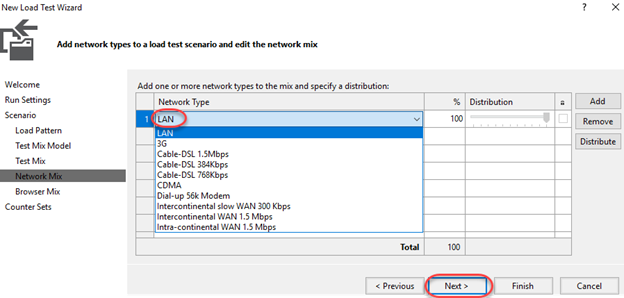

As with the tests, you can configure the network type mix to use when testing. Select LAN for all and click Next.

-

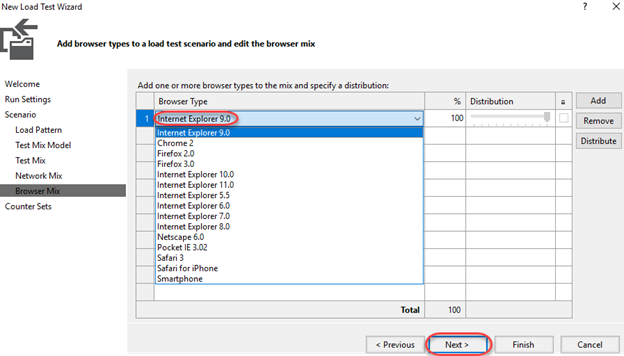

As with network types, you can also specify the mix of browsers. Select Internet Explorer 9.0 for all and click Next.

-

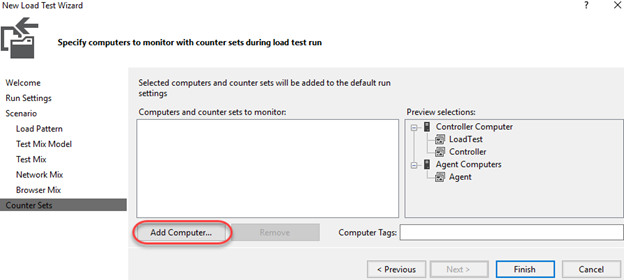

As part of the load testing you can collect performance counters. Click Add Computer to add a computer to the test.

-

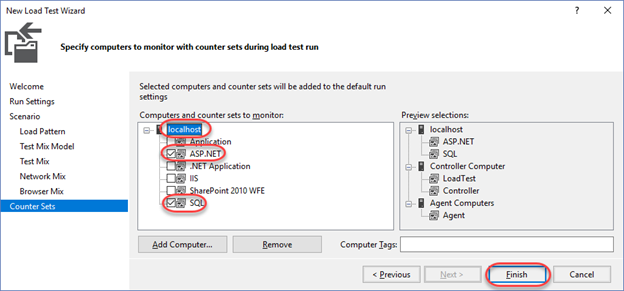

Set the name of the computer to localhost and check ASP.NET and SQL. Click Finish.

-

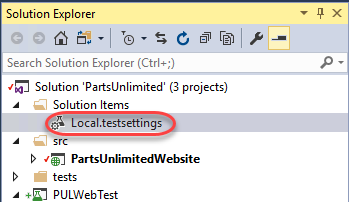

In Solution Explorer, double-click Local.testsettings under Solution Items to open it.

-

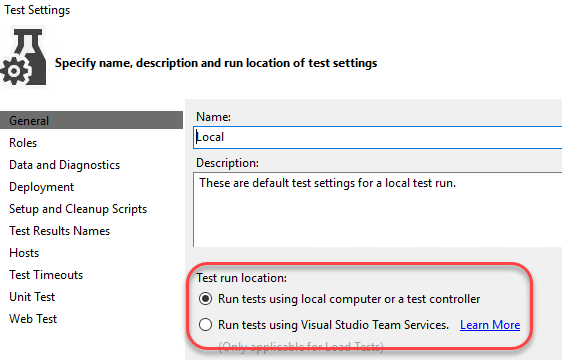

The General tab provides a place for you to update basic info about the test, such as whether it should be run locally or in Azure DevOps.

-

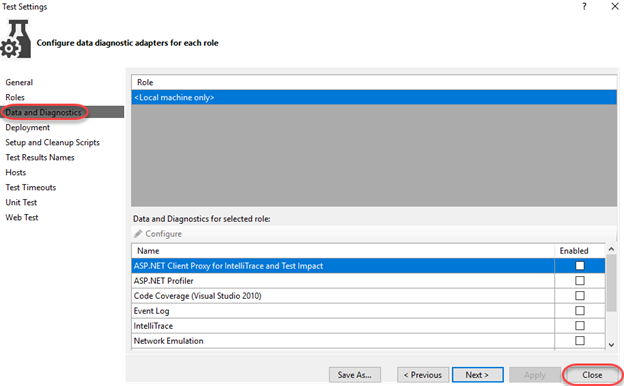

Select the Data and Diagnostics tab to view the available adapters. Options include those for ASP.NET, Event Log, IntelliTrace, Network Emulation, and more. No adapters are selected by default because many of them have a significant impact on the machines under test and can generate a large amount of data to be stored over the course of long load tests.

Task 6: Configuring the test controller

-

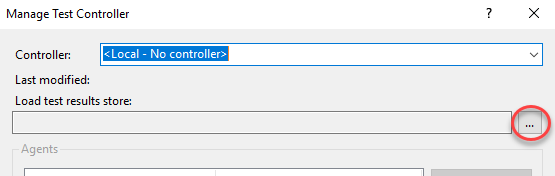

LoadTest1.loadtest should already be open. Click the Manage Test Controllers button.

-

No connection string will be set yet, so click the Browse button to specify one.

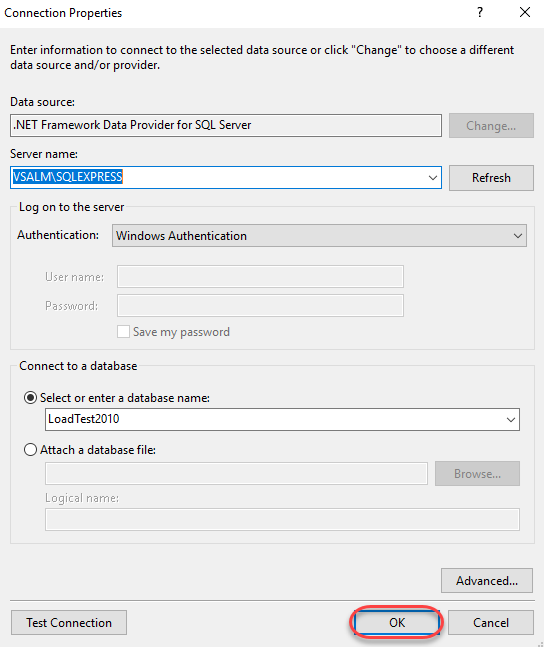

-

Select a local database to use and click OK to save.

-

Press Esc to close the Manage Test Controllers dialog.

Task 7: Executing, monitoring, and reviewing load tests

-

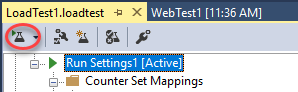

Click the Run Load Test button to begin a load test.

-

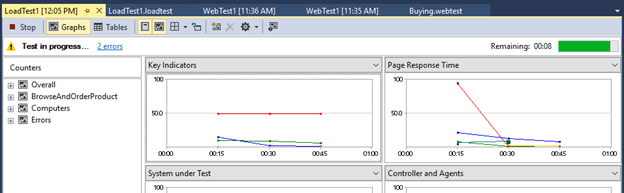

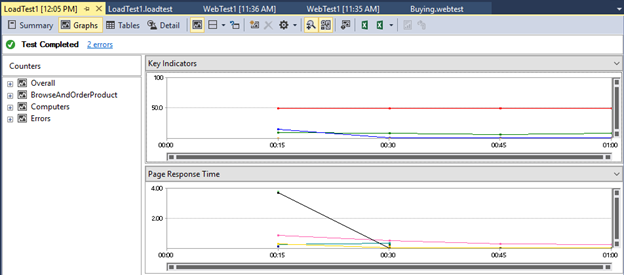

The test will run for one minute, as configured. By default, you should see four panels showing key statistics, with key performance counters listed below them. Data is sampled every 15 seconds based on our earlier configuration.

-

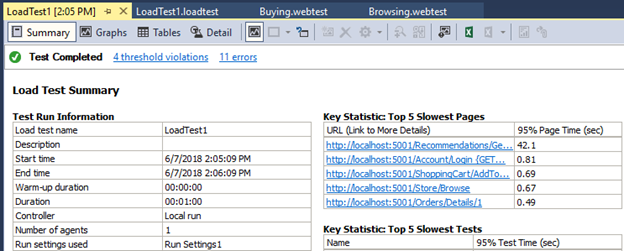

After the load test run finishes, it will automatically switch to the Summary view. The Summary view shows overall aggregate values and other key information about the test. Note that the hyperlinks to specific pages open up even more details in the Tables view.

-

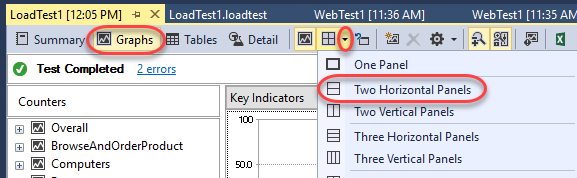

Select the Graphs view and change the layout to use Two Horizontal Panels. Both the panel layout and the contents of each graph can be customized.

-

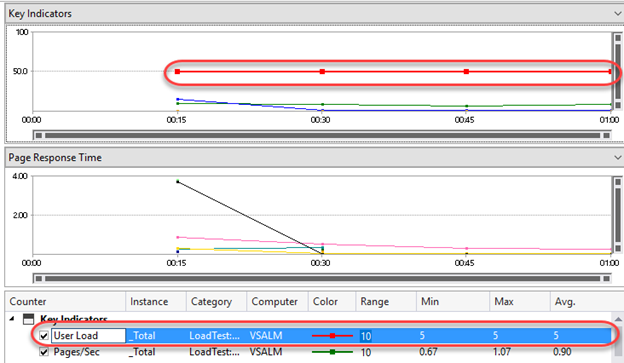

By default, the top graph will show Key Indicators and the bottom graph will show Page Response Time. These are two very important sets of data for any web application.

-

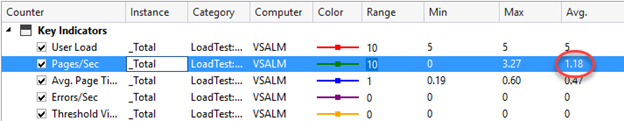

Click one of the Key Indicator graph lines or data points to select it. This also highlights the associated counter in the grid below the graphs. The red line in the screenshot represents the User Load at different points during the load test.

-

Click the Pages/Sec row in the Key Indicators section of the counter grid to highlight it in the graph. In the screenshot shown below, we can see that the average number of pages per second over the duration of the test was 1.18 (this may vary for you).
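As a rough illustration of what that average summarizes, the sketch below converts per-interval page counts into a pages-per-second rate for each 15-second sample and averages them. The page counts are made-up sample data, not results from this lab, and treating the grid's average as the mean of the sampled values is an assumption.

```python
from statistics import mean
from typing import List

SAMPLING_RATE_S = 15
# Made-up page counts completed in each 15-second interval of a 1-minute run.
pages_per_interval = [15, 18, 18, 21]


def rates(counts: List[int], interval_s: int) -> List[float]:
    """Pages per second for each sampling interval."""
    return [count / interval_s for count in counts]


per_sample = rates(pages_per_interval, SAMPLING_RATE_S)
print([round(r, 2) for r in per_sample])  # [1.0, 1.2, 1.2, 1.4]
print(round(mean(per_sample), 2))         # 1.2
```

Because the intervals are equal, this matches total pages divided by total seconds (72 / 60 = 1.2).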

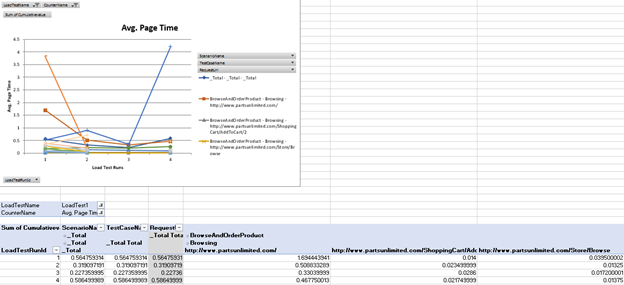

Task 8: Generating and viewing load test trend reports

-

Even though the initial load test may produce numbers that don’t seem to provide a wealth of information, it does provide a good baseline and lets us compare test runs to measure the performance impact of code changes. For example, if we had seen a relatively high level of SQL batch requests per second during our initial load tests, we might address it by adding some caching and then re-testing to make sure that the batch requests per second go down.

-

Return to LoadTest1.loadtest and click the Run Load Test button to run the load test again. Now there will be at least two test results to work with so that we can see how to perform some trend analysis. Feel free to run it a few times if you’d like lots of trend data.

-

When the final load test is complete, click the Create Excel Report button from the toolbar to load Excel.

-

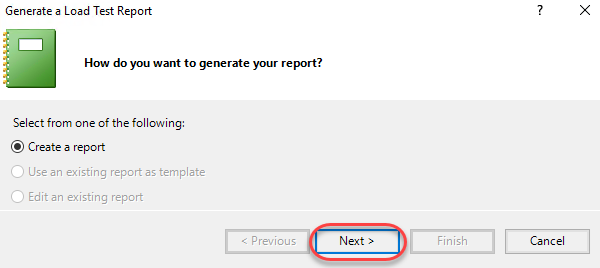

Click Next.

-

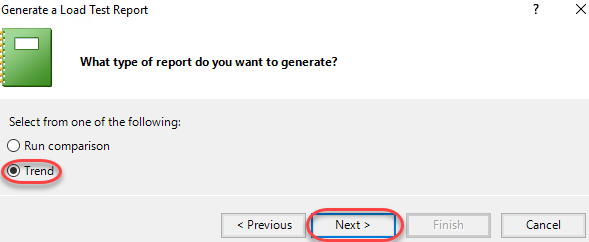

Select Trend and click Next.

-

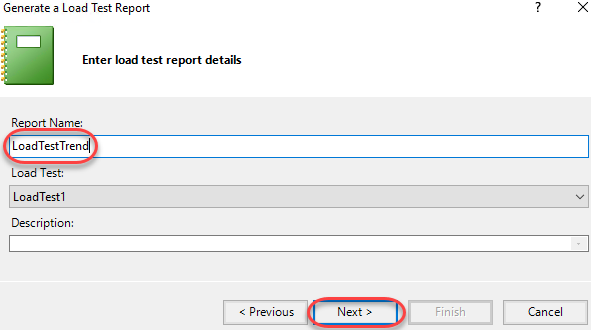

Set the Report Name to “LoadTestTrend” and click Next.

-

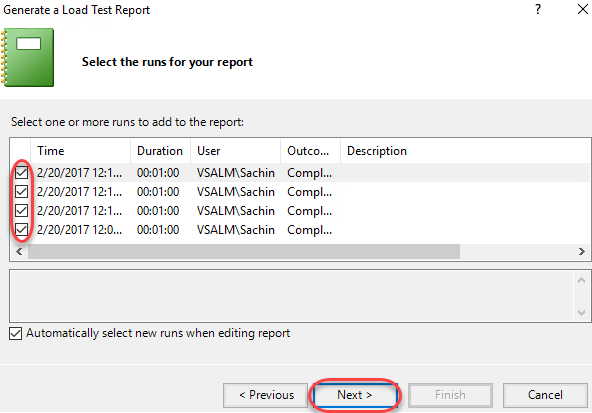

Select all available runs.

-

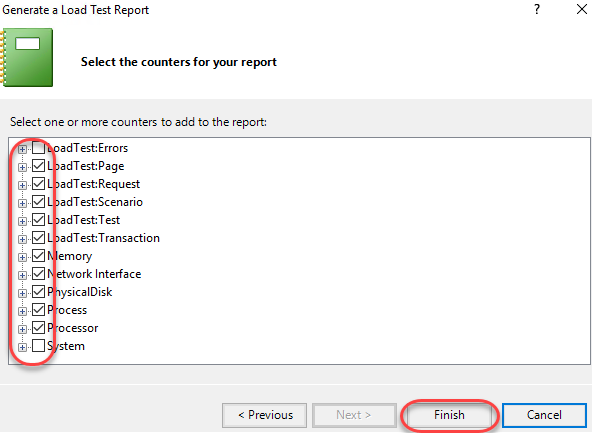

Keep the default performance counters selected and click Finish.

-

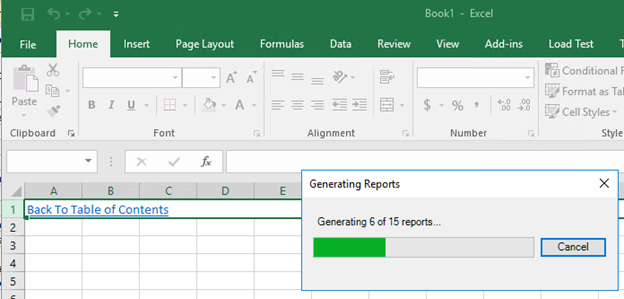

Excel will now generate a thorough report based on the results from the various test runs.

-

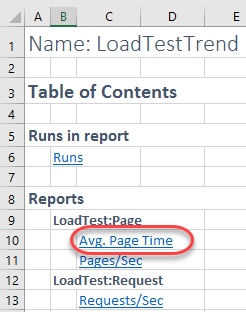

Click Avg. Page Time to view those results.

-

Your report will vary based on the test results and the number of test runs. However, you can see how useful this is when analyzing how changes in the solution affect performance: you could easily trace a performance regression to a specific time (and build).
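The kind of check the trend report supports can be sketched as comparing each run against a baseline and flagging the first one that exceeds a tolerance. The build labels, timings, and 20% threshold below are hypothetical. In practice, you would read the values from the report's Avg. Page Time data.

```python
from typing import List, Optional, Tuple

# Hypothetical history: (build label, average page time in seconds).
RUNS: List[Tuple[str, float]] = [
    ("build-101", 0.42),
    ("build-102", 0.44),
    ("build-103", 0.61),
    ("build-104", 0.63),
]


def first_regression(history: List[Tuple[str, float]], threshold: float = 0.20) -> Optional[str]:
    """Return the first build whose page time exceeds the baseline by more than threshold."""
    baseline = history[0][1]  # treat the first run as the baseline
    for label, avg_page_time in history[1:]:
        if (avg_page_time - baseline) / baseline > threshold:
            return label
    return None


print(first_regression(RUNS))  # build-103
```

Here build-102 is within about 5% of the baseline and passes, while build-103 is roughly 45% slower and is flagged.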